I had something of an epiphany in the shower this morning. I discovered that I actually agreed with Bradley Kuhn about something.

TLDR: Is “the cloud” a threat to Open Source? I stopped working on an Open Source calendaring project because of Google Calendars.

Several months ago I attended (part of) a talk by Bradley about how The Cloud (whatever that is) is a threat to Free Software. (Yes, I know what The Cloud is. Snarky remark in reference to all the different things The Cloud might mean to various people. See Simon Wardley’s wonderful talk about what the cloud is.)

His reasons struck me as so outside of my way of thinking about software that I ended up leaving the talk. Oh, also, Skippy wanted to go to lunch, and that sounded like a lot more fun. Nothing personal, Bradley. He was talking about how something like Google Calendar (actually his example was GMail, but hold on a minute) was a threat to Free Software because the code, even though it’s in Javascript and right there in front of you, can’t really be inspected (ie, you can’t learn from it) because it’s hugely obfuscated. Also, you can’t see the back end. So here’s a service you can use for “free”, but it’s not Free, because it’s in chains, metaphorically speaking.

Then, this morning, I was thinking about why people are involved in Free/Open Source software, but also why they stop being involved, and I realized something.

I used to have a web-based calendar thingy. It was written in Perl, and it was really very cool. In fact, it not only started my passion for Open Source (it was the first thing I ever had on CPAN, and it was the first software that I ever wrote which was featured in a book!) it also paid my mortgage for a few years. I used to write calendaring applications for the General Motors Desert Proving Grounds in Mesa, Arizona. Although that plant is long closed, their scheduling ran on my software. If you wanted to schedule a test on the dust track (tests a vehicles various rubber seals to make sure they keep out dust, as well as handling in those conditions) you used the web-based scheduling application, called D.U.S.T. (I forget what it stands for – Dusttrack Usage Scheduling Tool or something) and scheduled it. This worked better than grubby bits of paper, because it didn’t get lost, and you always could get to it without walking down the hallway.

Also, when I was at Databeam, back in the late 90s, I wrote a similar application for scheduling conference rooms (clever name: Conference Rooms). I went up to the front desk one day and stole the conference room scheduling book and hid it, forcing everyone to use the online scheduling app. Strangely, it worked, and I didn’t get fired.

Then, I got involved in a project called Reefknot, which was an implementation of various international calendaring standards, in Perl. That was humming along nicely. And I had a dozen different calendar modules on CPAN.

By the way, in case you don’t know, calendaring is hard. Sure, it looks easy, but then you get into things like “every other Monday at 10am, except during company vacations.” Or possibly “the last day of each month.” Think for a little while about how you’d implement that, and your brain will start to melt just a little. “every monday” suggests a simple solution, but as soon as you start having to deal with exceptions, things get very very complicated. And what with different length months and leap years … and don’t even get me started on time zones. *shudder*

Anyways, then something called Google Calendar came along. It worked with all of the various calendaring applications. It did the various calendaring specifications, including the long-elusive CalDav. We were all very excited in the calendaring community, but then an odd thing happened. People stopped working on calendaring stuff. Because, you know, it’s already done.

So, I stopped working on an Open Source project because there was an implementation in the cloud. (ie, online somewhere.)

So, was Bradley right? Did Google Calendar kill the Reefknot project specifically because it’s closed source? Yes, in a sense. I don’t believe, as the FSF does, that closed source is intrinsically immoral. But there’s a direct correlation between the projects I no longer work on, and great cloud based implementations of the same functionality, where I don’t have access to the source to participate.

Furthermore, as my interaction with software is increasingly via a browser, and not via running software on my own computer, I have less and less incentive – and ability – to tinker with those things.

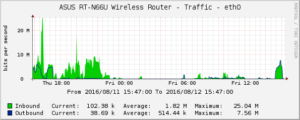

Now, I’m weird, I still run several of my own servers. Granted, those servers are “in the cloud”, meaning that I have no idea where they are physically located. But I have root on them. I build software from source on them, and tinker with that source from time to time. I tinker with the source code of my blog, even though there’s a good blogging platform “in the cloud”, but I also have several blogs on Blogger, simply because it’s simple and I don’t want to monkey with it.

So, although I disagree with Bradley’s philosophically, I find that he may be completely right for more pragmatic reasons.

But at the same time, Open Source has a whole new rebirth of late, and there continue to be ever more exciting projects out there. I’m much more concerned about my kids, and what they will find to hack on. My son is a hacker. He likes to build stuff, take stuff apart, break it and fix it, figure out how it works. I don’t know if I’m doing an adequate job of encouraging this. I really need to get him a subscription to Make magazine. I wonder, however, when he gets a little older, if he’ll be interested in programming. I think he’d be really good at it, but it would be a great shame if the removal of applications to The Cloud also results in a lack of opportunities to hack on code.

This Christmas, one friend did a treasure hunt for her kids in the Minecraft world, which was incredibly cool. I’ve seen them on the server a number of times since then.

This Christmas, one friend did a treasure hunt for her kids in the Minecraft world, which was incredibly cool. I’ve seen them on the server a number of times since then.